Are “free” AI tools really free?

There is an age-old argument that if something is free, then you are the product.

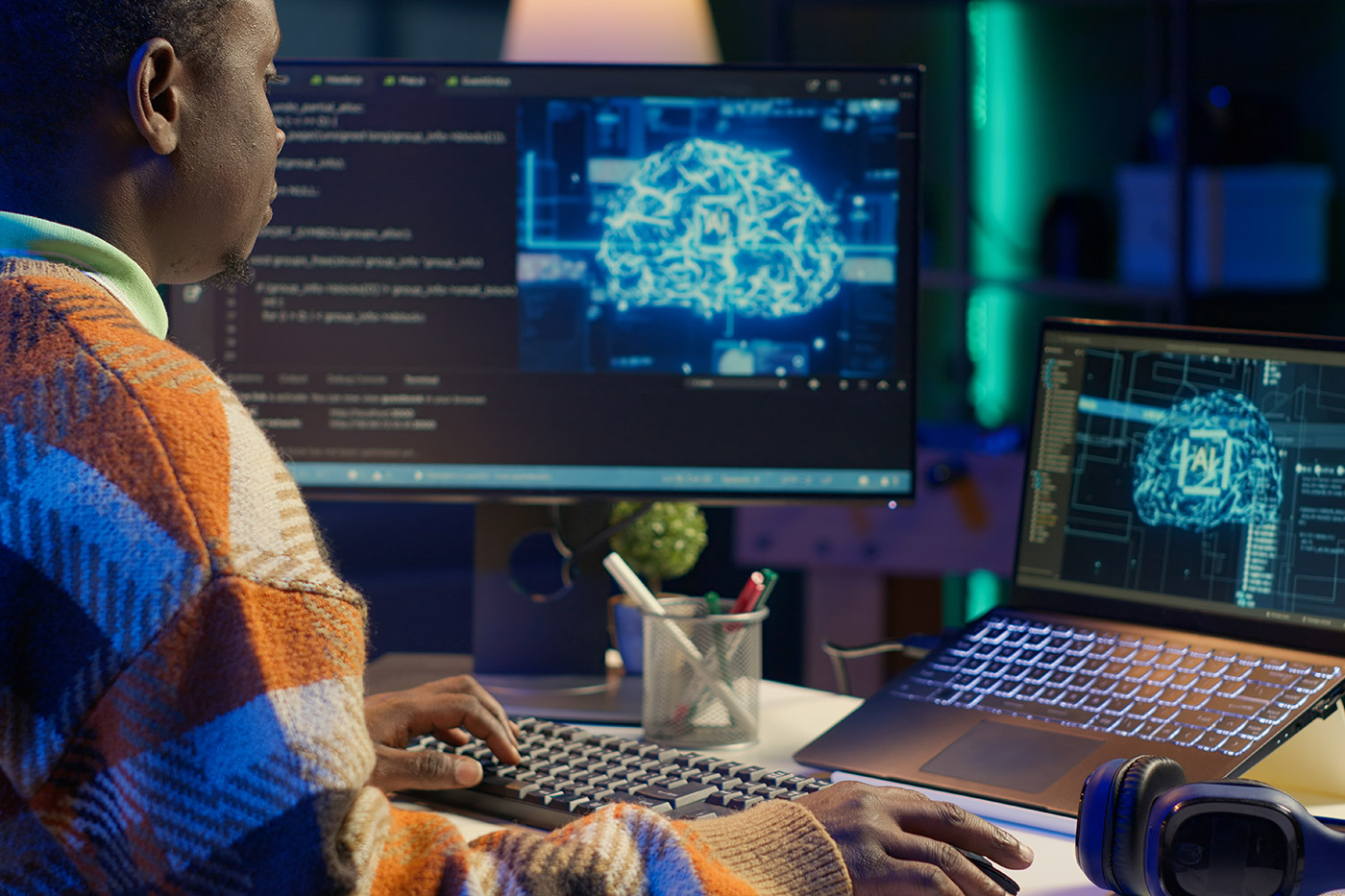

This holds particularly true for artificial intelligence (AI) tools available at no cost. Spend just 10 minutes on ‘There’s An AI For That’, a directory listing thousands of AI tools, and you will see how easily staff could end up using dozens of unvetted services, and the risks that such fragmented AI usage can pose to your organisation.

Just as social media platforms collect user data to tailor advertisements, many free AI tools reserve the right to monitor and collect user inputs in ways that may not be transparent. As a result, when businesses enter sensitive or proprietary information into these platforms, they may unknowingly expose that data.

Many free AI services explicitly state in their terms of use that user inputs can be stored and used for training purposes.

For instance, OpenAI’s free version of ChatGPT reserves the right to use user interactions to enhance its models. This means confidential business strategies, customer data, or internal communications could be inadvertently shared and stored.

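A stark reminder of these risks came recently when DeepSeek, a popular AI chatbot, suffered a security lapse within days of its public launch: security researchers reported finding a publicly accessible database that exposed user chat histories.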

When choosing an AI tool, businesses must consider the potential fallout of a data breach, which can range from severe and lasting reputational damage to hefty regulatory penalties.

Why Microsoft Copilot?

Back in 2013, business owners were dealing with the shadow IT risks of Dropbox, Google and many other free tools that employees started using before IT teams could catch up. Much of this was solved by paid versions of these tools, which gave businesses the admin controls they needed to manage their data, especially when employees or contractors leave.

The same is now true of AI. If your staff are creating accounts with free AI services that neither you nor your IT team has any control over, your data is at risk.

The answer, once again, is to choose AI solutions from reputable vendors that offer enterprise-grade security, clear data policies and compliance with regulatory standards. Microsoft Copilot is just one of them, and its paid version also includes Enterprise Data Protection for prompts and responses.

Beyond managing employee AI usage, Microsoft tools such as Purview help you track and audit AI-generated content across your organisation, making it easier to respond effectively to any Subject Access Requests that involve AI.

Whatever you decide, ensuring that AI tools are used responsibly and securely must be central to your IT strategy and guidance for your team.

If you’re unsure how to navigate this, let’s talk. As your vCIO (virtual Chief Information Officer), I can help.
